2018 BallotReady “Make a Plan to Vote” impact analysis

Did the BallotReady “Make a Plan to Vote” tool increase voter turnout during the 2018 midterm elections?

Program design by Debra Cleaver (VoteAmerica) and Alex Niemczewski (BallotReady). This study was funded by the Arnold Foundation. Experiment design and analysis by Enrico Cantoni (Assistant Professor, Bologna University), Donghee Jo (Assistant Professor, Northeastern University), and Cory Smith (PhD Candidate, MIT). This was originally published at this URL: https://osf.io/5wxqc

Abstract

In this study, we aim to experimentally test the effectiveness of the NCO/BallotReady information and turnout tool on electoral participation. BallotReady is a non-partisan social enterprise that runs a website, BallotReady.org, which provides information about every candidate in upcoming elections. This year, BallotReady partnered with the voter technology non-profit Vote.org to create a co-branded website with voter information, including a vote-planning tool called “Make a Plan to Vote” (MAPTV). We study a clustered randomized controlled trial (RCT) of BallotReady users in the 2018 US midterm elections. Users were randomly allocated to the MAPTV version of the site (treatment) or the default, information-only BallotReady page (control). Matching users to voter files based on address, we find that users of both versions turned out at a high rate (74%), well above the national average, but we find no statistically significant effect of the MAPTV tool on turnout. Point estimates of the effect are generally positive but small in magnitude. We consider several reasons why the intervention may have had only small observable effects.

Introduction

Civic participation is the cornerstone of democracy. As such, policy makers, academic experts, and activists have devoted their attention to discovering ways of increasing turnout. In recent elections, many politicians and political organizations have promoted various behavioral interventions. One technique that has become increasingly common is to explicitly ask voters to plan the steps they will take to vote. National political campaigns in both the United States and other countries have used this approach: in the 2012 US presidential election, President Obama’s campaign instructed canvassers to ask specific questions about voters’ plans.[1] Similarly, in the 2017 UK parliamentary election, Prime Minister Theresa May sent an email to all members of the Conservative Party urging everyone to vote in the upcoming election. Included in the email was a link to a webpage which prompted voters to make a specific election day plan, asking when and with whom they planned to vote.[2]

In this study, we aim to experimentally test the effectiveness of BallotReady’s information and turnout tool, which combines the behavioral interventions described above. BallotReady is a non-partisan social enterprise that runs a website, BallotReady.org, which provides information about every candidate in upcoming elections. Users who come to the BallotReady site type in their address to view every race and referendum that they will see in the voting booth. From there, voters can compare candidates based on stances on issues, biography, bar association recommendations, news articles, and endorsements. Once they have made a decision, voters save their choices to print out or pull up on their phone in the voting booth. BallotReady has also introduced a turnout tool, “Make a Plan to Vote” (MAPTV), designed to incorporate the insights of the research on these interventions. The tool (1) asks specific questions to nudge users to make a plan to vote, (2) encourages users to sign up for reminders, and (3) provides a platform through which users can easily share their intent to vote with friends on social networking websites.

We recruited a sample of 119,211 voters for the 2018 US midterm elections who were randomly chosen to be either directed to the MAPTV tool or to the default, information-only BallotReady page. By matching their search addresses to voter files, we record voter characteristics and midterm turnout. After dropping users whose addresses could not be matched, we retain a sample of 66,195 users. Overall, treatment and control groups showed no statistically significant differences in turnout. Point estimates of the MAPTV effect are generally positive but small in magnitude. We consider several reasons for the lack of a discernible effect, including the high voting propensity of BallotReady users, similar effectiveness between both BallotReady experiences, attenuation due to low takeup or poor matching, or general ineffectiveness of the MAPTV tool.

This study relates to a large literature on voter turnout interventions. The most closely related studies focus on voting plans, most notably Nickerson [2008], who implemented a randomized intervention nudging people to make a specific plan for the election. Phone calls asking questions “designed to facilitate voting plan making” increased turnout by 4 percentage points on average and by 9 percentage points for single-eligible-voter households. However, other similar interventions failed to replicate these results, e.g. Cho [2008].

Other behavioral “nudges” have also been suggested to enhance voter participation. For instance, reminders have been shown to be effective. Dale and Strauss [2009] sent text message reminders to voters, which increased the turnout of the treatment group by 3 percentage points compared to the control group. The effect was later corroborated by a replication study, Malhotra et al. [2011].

Social network interventions are also effective. Voters who received Facebook messages encouraging them to vote had their turnout increased by 8 percentage points (Teresi and Michelson [2015]). Facebook users who were shown a notification listing the friends who had voted in an election were 0.4% more likely to vote than users who got the information-only treatment (Bond et al. [2012]).

2 Experimental Design

We recruited 119,211 users to the BallotReady website through Facebook advertising. Upon entering the BallotReady landing page, users were prompted to enter their address in order to find local ballot information. Once they did so, they were randomized to either the MAPTV page (treatment) or the default, information-only BallotReady page (control). Since we are typically only able to identify users based on their address, we conducted the randomization at the address level, applying the same treatment status to any user who entered an address that had previously been submitted. Notably, since randomization occurred after users entered their address, there cannot be differential sample attrition at the address level; treatment and control differences only begin once we observe the address.
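The address-level assignment described above can be illustrated with a minimal sketch. It is not the BallotReady implementation: the class name, the address normalization, the seed, and the 50/50 assignment probability are all assumptions made for illustration. The only property taken from the text is that an address keeps the treatment status it received the first time it was submitted.

```python
import random


class AddressRandomizer:
    """Assign a treatment arm once per address and reuse it on repeat visits."""

    def __init__(self, seed=0, p_treat=0.5):
        self._rng = random.Random(seed)  # hypothetical seed, for reproducibility only
        self._p_treat = p_treat          # assumed split; the study does not state it
        self._assignments = {}           # normalized address -> arm

    @staticmethod
    def normalize(address):
        # Hypothetical normalization: lowercase and collapse whitespace.
        return " ".join(address.lower().split())

    def assign(self, address):
        key = self.normalize(address)
        if key not in self._assignments:
            arm = "treatment" if self._rng.random() < self._p_treat else "control"
            self._assignments[key] = arm
        return self._assignments[key]


randomizer = AddressRandomizer(seed=1)
first_visit = randomizer.assign("12 Main St, Springfield")
repeat_visit = randomizer.assign("12  main st, springfield")
print(first_visit == repeat_visit)  # True: same address, same arm
```

Because the arm is drawn only after an address has been entered, every observed address has an assignment, which is the property the no-differential-attrition argument relies on.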

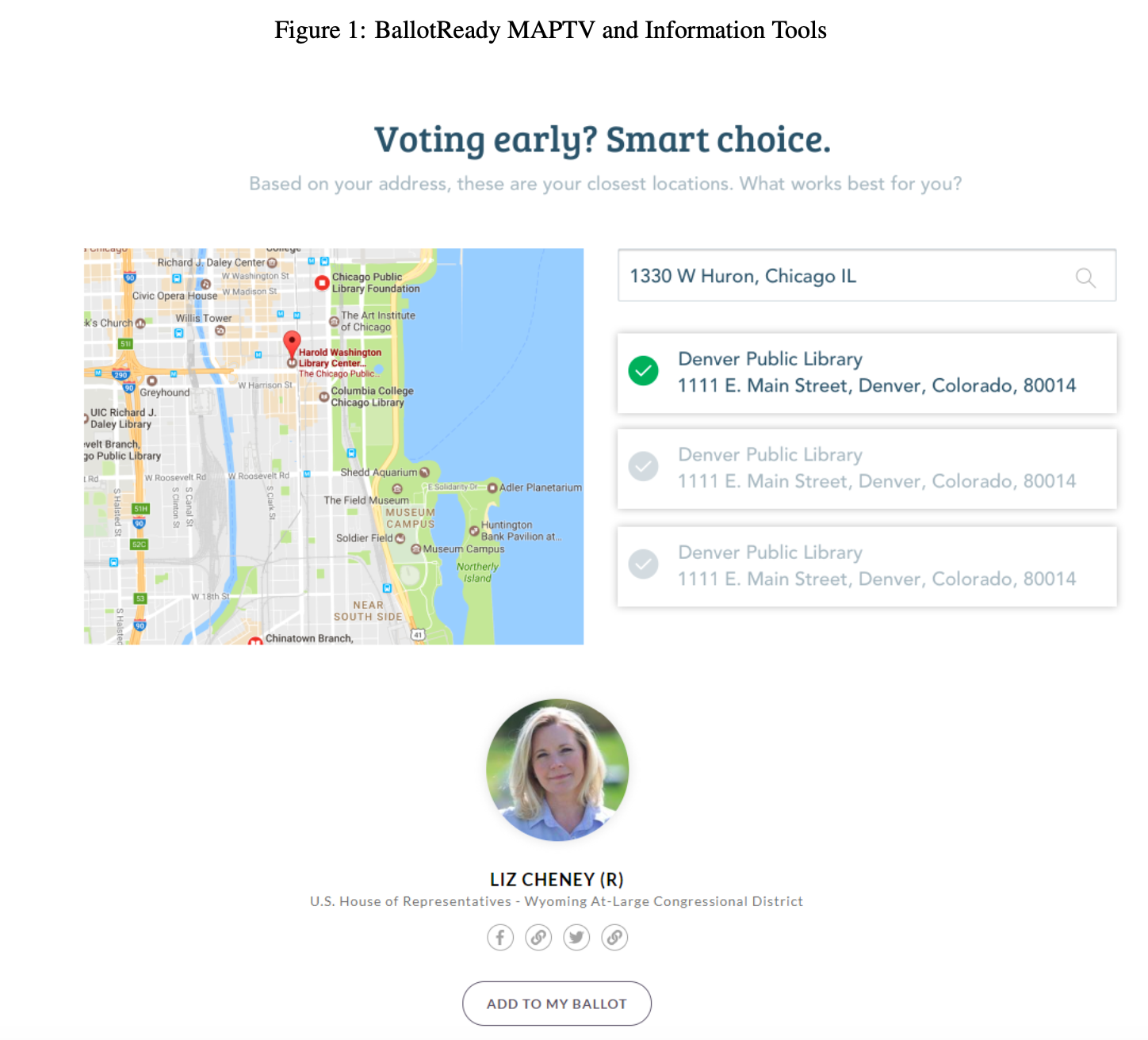

On the MAPTV page, users were shown information about their polling station hours and location and were then prompted to plan how they would vote. Users selected a particular time they wanted to vote and could optionally sign up for text message or e-mail reminders on election day. Finally, users could return to the information section of the BallotReady website. The information-only BallotReady page contains down-the-ballot lists of candidates for each election and, wherever possible, includes biographical details, positions on political issues, and endorsements. Examples of both pages are shown in Figure 1.

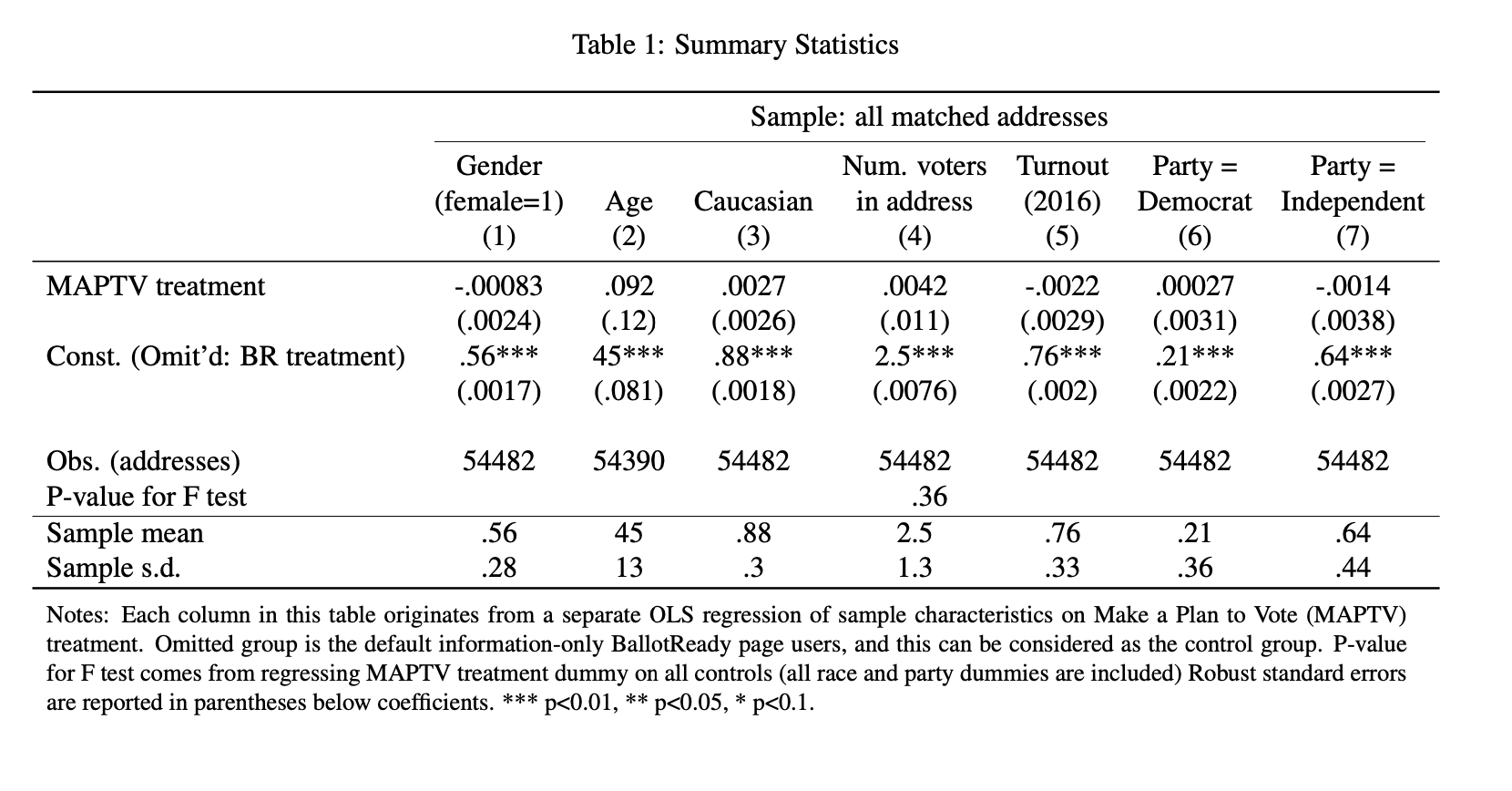

After voter files were updated for the 2018 midterm elections, we matched users to the TargetSmart voter file database by providing TargetSmart with user information and downloading their matched voters. Since a small number of users made permanent BallotReady accounts and provided e-mail addresses, we attempted to first match with this information as specified in our pre-analysis plan. If no e-mail was provided, we attempted to match on address, a procedure that could potentially return multiple matches per user for multi-voter households. Of the 119,211 users, we successfully matched 66,195, which yielded 135,183 voters. Collapsing characteristics at the address level,[3] we report differences on observable characteristics between the treatment and control households in Table 1. Across seven different demographic characteristics, we find no statistically significant differences between the two groups and the point estimates are all small in magnitude, providing support for a balanced sample.

3 Analysis

There are a number of potential ways to conduct our analysis given the multiple matches returned from voter files. In general, we would want each user’s experience to receive the same weight regardless of the number of matches. Thus, as specified in our pre-analysis plan, the unit of observation in the regression will be an individual voter but the weight will be equal to the number of BallotReady users at their address divided by the number of matches. So, a uniquely matched user would receive a weight of 1 while a user who was matched to a two-voter household would be represented by two voters each with a weight of 0.5. We additionally specified that our OLS regression controls would be:

- State interacted with party dummies interacted with non-interacted dummies for 2016 general election turnout, 2014 general midterm turnout, 2012 general election turnout, 2010 midterm turnout, 2008 general election turnout (7 × 5 × 4 = 140 variables)

- State interacted with age and age squared

- State interacted with number of registered voters at address

- State interacted with (imputed) gender variables interacted with (imputed) race variables interacted with party dummies

- Standard errors will be clustered by address as this is the level at which treatment is assigned
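The weighting scheme can be made concrete with a small sketch. The toy records, field names, and helper functions below are hypothetical. The cap at 1 follows the table notes. The sketch computes only the weighted raw turnout gap between treatment and control. It does not include the regression controls or the address-clustered standard errors listed above.

```python
from collections import Counter


def voter_weights(voters, users_per_address):
    """One weight per matched voter: users at the address / matched voters there."""
    matches_per_address = Counter(v["address"] for v in voters)
    weights = []
    for v in voters:
        ratio = users_per_address[v["address"]] / matches_per_address[v["address"]]
        weights.append(min(1.0, ratio))  # capped at 1, as stated in the table notes
    return weights


def weighted_turnout_gap(voters, weights):
    """Weighted mean turnout in treatment minus weighted mean turnout in control."""
    totals = {True: [0.0, 0.0], False: [0.0, 0.0]}  # treated? -> [sum w*y, sum w]
    for v, w in zip(voters, weights):
        totals[v["treated"]][0] += w * v["voted"]
        totals[v["treated"]][1] += w
    return totals[True][0] / totals[True][1] - totals[False][0] / totals[False][1]


# Hypothetical data: one BallotReady user per address, one or two matched voters.
voters = [
    {"address": "A", "treated": True, "voted": 1},
    {"address": "B", "treated": True, "voted": 1},
    {"address": "B", "treated": True, "voted": 0},
    {"address": "C", "treated": False, "voted": 1},
    {"address": "D", "treated": False, "voted": 0},
    {"address": "D", "treated": False, "voted": 0},
]
users_per_address = {"A": 1, "B": 1, "C": 1, "D": 1}

weights = voter_weights(voters, users_per_address)
gap = weighted_turnout_gap(voters, weights)
print(weights)  # [1.0, 0.5, 0.5, 1.0, 0.5, 0.5]
print(gap)      # 0.25
```

Each address contributes a total weight of 1 per user, so a two-voter household does not count twice as much as a uniquely matched user.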

As a robustness check, we also pre-specified that we would analyze results with the “post-double-selection” LASSO method of Belloni et al. [2014]. We would select from a larger set of potential controls including:

- State interacted with all combinations of individual-level turnout variables (2016 general election turnout, 2014 general midterm turnout, 2012 general election turnout, 2010 midterm turnout, 2008 general election turnout) interacted with party dummies (7 × 32 × 4 = 896 variables)
  - Party dummies will be coded into four categories where possible: Democrat, Republican, Independent, and Other

- State interacted with age and age squared

- State interacted with number of registered voters at address

- County dummies interacted with (imputed) gender variables interacted with (imputed) race variables interacted with party dummies
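The two variable counts quoted in the control lists (140 and 896) can be reproduced with a short sketch. Reading the factor of 7 as the number of states is an assumption, since the text does not label it. The factor of 4 is the four party categories. The factors 5 and 32 correspond to five separate turnout dummies versus all voted/did-not-vote patterns across the same five elections.

```python
from itertools import product

N_STATES = 7   # assumed meaning of the "7" in the quoted counts
N_PARTIES = 4  # Democrat, Republican, Independent, Other
ELECTIONS = [2016, 2014, 2012, 2010, 2008]

# Main specification: one separate (non-interacted) dummy per past election.
separate_dummies = len(ELECTIONS)

# LASSO candidate set: every voted / did-not-vote pattern across the five elections.
turnout_patterns = list(product([0, 1], repeat=len(ELECTIONS)))

main_spec_count = N_STATES * separate_dummies * N_PARTIES
lasso_count = N_STATES * len(turnout_patterns) * N_PARTIES
print(main_spec_count, lasso_count)  # 140 896
```

The richer candidate set grows quickly because it fully interacts the turnout history, which is why a selection method is used rather than including every term directly.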

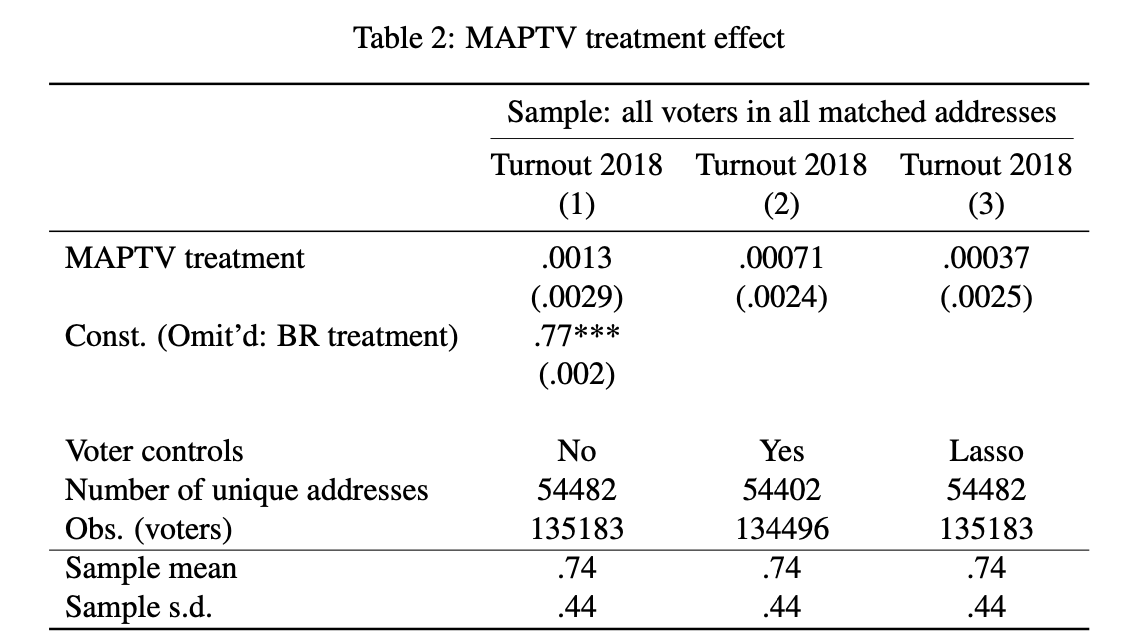

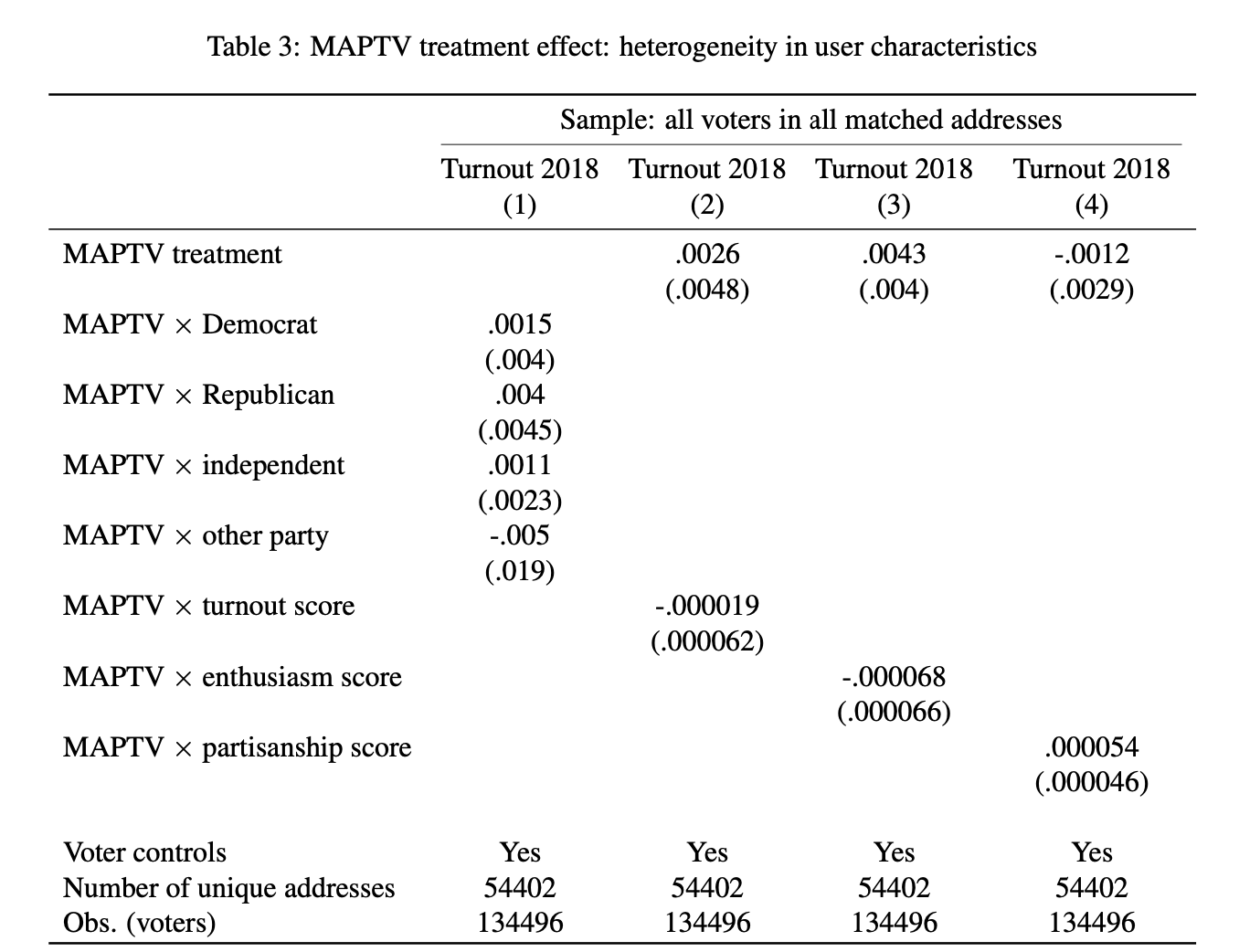

Table 2 reports results on turnout. Column (1) reports raw differences, column (2) reports our main, pre-specified regression, and column (3) reports the pre-specified LASSO regression. The results from these three analyses are similar, with positive but small and statistically insignificant estimates of the MAPTV effect on voter turnout. These effect sizes range from an increase of 0.03 percentage points in column (3) to 0.13 percentage points in column (1). We conclude that the treatment and control groups had similar levels of voter turnout.

We consider several reasons why the treatment and control groups voted at similar rates. One potential explanation is that the BallotReady user base is a particularly engaged set of voters whose turnout is difficult to increase. Overall, turnout in the sample is 74%, far above average for a midterm election. These high rates of turnout may reflect BallotReady users’ general enthusiasm, or an impact of the BallotReady experience on turnout regardless of treatment status. In the latter case, any positive effect from the control, information-only site would attenuate the treatment effect estimates, as we are only able to observe differences between treatment and control. With respect to the former case, we test whether lower-propensity voters are more affected by the treatment in Table 3, which includes treatment effects interacted with party, TargetSmart’s turnout score (0-100), TargetSmart’s voter “enthusiasm” score (0-100), and TargetSmart’s partisanship score (0-100). These regressions were not pre-specified but provide some evidence for this explanation. The point estimates in columns (2) and (3) imply that higher-propensity and more enthusiastic voters had smaller treatment effects. However, this evidence is limited: the estimates are not statistically significant and are still fairly small. The lowest-propensity or least enthusiastic voters in these regressions would have their turnout increased by 0.3 or 0.4 percentage points, respectively.

Another explanation for the results is that they were attenuated by the matching procedure. If, for example, a user was matched to two voters, on average we would expect only half the effect on each of the voters assuming there were no spillovers. Appendix Table A1 runs our pre-specified regression adjusting for this effect, adding in the interaction between treatment and “treatment intensity,” defined as the ratio of BallotReady users at an address to the number of voter matches. Appendix Table A1 also reports differences between unique matches and matches of 2+ voters. These analyses do not change the results in Table 2 substantially, as most of the differences between these groups are not statistically significant and are small in magnitude.
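The attenuation argument can be expressed as a short calculation. This is a sketch under the assumption stated in the text, namely that only the BallotReady user responds to treatment and there are no spillovers to other household members. The function names and the one-percentage-point effect are hypothetical.

```python
def treatment_intensity(n_users, n_matches):
    """Ratio of BallotReady users at an address to matched voters there."""
    return n_users / n_matches


def expected_observed_effect(true_effect_pp, n_users, n_matches):
    """Average effect per matched voter if only the users themselves respond."""
    return true_effect_pp * min(1.0, treatment_intensity(n_users, n_matches))


# Hypothetical true effect of 1 percentage point on the person who used the site.
for n_matches in (1, 2, 4):
    print(n_matches, expected_observed_effect(1.0, 1, n_matches))
# 1 1.0
# 2 0.5
# 4 0.25
```

With one user matched to two voters, the average effect across the matched voters is half the effect on the user, which is the case described in the text.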

Finally, the MAPTV tool may simply not have been effective in increasing turnout. This could occur for either of two reasons. First, the online nature of the tool may have meant that a substantial portion of users did not engage strongly with it; in that case, vote planning done, say, through in-person canvassing might have been effective in this population even though the online version we provided was not. Second, vote planning of any sort might have been ineffective in this population, a result similar to Bond et al. [2012]. With respect to engagement, available metrics suggest it was reasonably high. Of the 119,211 users recruited, 45,792 chose a polling time, 43,610 chose a polling location, and 13,710 signed up for reminders. Although issues with user behavior databases prevent us from determining the exact fraction of usage by treatment status, most available metrics suggest that any leakage was low.[4] Thus, probably around 70-75% of the treatment group ultimately used the first few features of the tool, although fewer used reminders. Some attenuation of the effect could have come from this incomplete takeup, although it would not be a major cause.

Notes to Table 2: Each column in this table originates from a separate WLS regression of 2018 turnout on Make a Plan to Vote (MAPTV) treatment. Weights are the number of BallotReady users divided by the number of voters at the address (capped at a maximum of 1). The omitted group is users of the default, information-only BallotReady page, which can be considered the control group. Voter controls in Column (2) include state dummies interacted with 2008-2016 turnout dummies (non-interacted between turnout dummies), state interacted with age and age squared, and state interacted with race interacted with party dummies. Voter controls in Column (3) are selected by the double-lasso variable selection method (Urminsky et al. 2016). A total of 241 variables are chosen from state interacted with 2008-2016 turnout dummies (interacted with each other) interacted with party dummies, state interacted with age and age squared, state interacted with number of voters at address, and county interacted with gender, race, and party. Standard errors (in parentheses below coefficients) are clustered by address. *** p<0.01, ** p<0.05, * p<0.1.

Notes to Table 3: Each column in this table originates from a separate WLS regression of 2018 turnout on Make a Plan to Vote (MAPTV) treatment interacted with a variety of variables. Weights are the number of BallotReady users divided by the number of voters at the address (capped at a maximum of 1). Partisanship, turnout, and enthusiasm scores (∈ [0, 100]) are calculated and provided by TargetSmart. Voter controls are the same as in Column (2) of Table 2 (see the description in the notes to Table 2). In addition to these controls, we control for partisanship, turnout, and enthusiasm scores in each column of this table. Standard errors (in parentheses below coefficients) are clustered by address. *** p<0.01, ** p<0.05, * p<0.1.

References

- Alexandre Belloni, Victor Chernozhukov, and Christian Hansen. Inference on treatment effects after selection among high-dimensional controls. The Review of Economic Studies, 81(2): 608–650, 2014.

- Robert M Bond, Christopher J Fariss, Jason J Jones, Adam DI Kramer, Cameron Marlow, Jaime E Settle, and James H Fowler. A 61-million-person experiment in social influence and political mobilization. Nature, 489(7415):295, 2012.

- Dustin Cho. Acting on the intent to vote: a voter turnout experiment. Available at SSRN 1402025, 2008.

- Allison Dale and Aaron Strauss. Don’t forget to vote: text message reminders as a mobilization tool. American Journal of Political Science, 53(4):787–804, 2009.

- Neil Malhotra, Melissa R Michelson, Todd Rogers, and Ali Adam Valenzuela. Text messages as mobilization tools: The conditional effect of habitual voting and election salience. American Politics Research, 39(4):664–681, 2011.

- David W Nickerson. Is voting contagious? Evidence from two field experiments. American Political Science Review, 102(1):49–57, 2008.

- Holly Teresi and Melissa R Michelson. Wired to mobilize: The effect of social networking messages on voter turnout. The Social Science Journal, 52(2):195–204, 2015.